Multigrid Method for Finite Element¶

-->Please access the video via one of the following two ways

A one dimensional problem¶

Two-point boundary problems and finite element discretization

Define the functional space

Given any \(f:[0,1] \rightarrow R\), consider

Find \(u \in V\) such that

Theorem 12

Problem (49) is equivalent to: Find \(u \in V\) such that

Proof. For any \(v \in V, t \in R\), let \(g(t)=J(u+t v)\). Since \(u=\) \(\arg \min _{v \in V} J(v)\) means \(g(t) \geq g(0)\). Hence, for any \(v \in V, 0\) is the global minimum of the function \(g(t)\). Therefore \(g^{\prime}(0)=0\) implies

By integration by parts, which is equivalent to

It can be proved that the above identity holds if and only if \(-u^{\prime \prime}=f\) for all \(x \in(0,1)\). Namely \(u\) satisfies (54). \(\square\)

Let \(V_{h}\) be finite element space and \(\left\{\varphi_{1}, \varphi_{2}, \cdots \varphi_{n}\right\}\) be a nodal basis of the \(V_{h}\). Let \(\left\{\psi_{1}, \psi_{2}, \cdots, \psi_{n}\right\}\) be a dual basis of \(\left\{\varphi_{1}, \varphi_{2}, \cdots \varphi_{n}\right\}\), namely \(\left(\varphi_{i}, \psi_{j}\right)=\delta_{i j}\).

Let

then

and

At the same time, let \(u_{h}=\sum_{i=1}^{n} \mu_{i} \varphi_{i}\),

And \(u_{h}\) solves the problem: Find \(u_{h} \in V_{h}\)

where

which is equivalent to solving \(\underline{A} \mu=b\), where \(\underline{A}=\left(a_{i j}\right)_{i j}^{n}\) and \(a_{i j}=\) \(a\left(\varphi_{j}, \varphi_{i}\right)\) and \(b_{i}=\int_{0}^{1} f \varphi_{i} d x .\) Namely

Which can be rewritten as

Using the convolution notation, (52) can be written as

where \(A=\frac{1}{h}[-1,2,-1]\).

Spectrum properties of \(A *\)¶

We recall that \(\lambda\) is an eigenvalue of \(A\) and and \(\xi \in R^{N} \backslash\{0\}\) is a corresponding eigenvector if

Because of the special structure of \(A\), all the \(N\) eigenvalues, \(\lambda_{k}\), and the corresponding eigenvectors, \(\xi^{k}=\left(\xi_{j}^{k}\right)\), of \(A\) can be obtained, for \(1 \leq k \leq N\), as follows:

Indeed, the relation \(A \xi^{k}=\lambda_{k} \xi^{k}\) can be verified by following elementary trigonometric identities:

\(-\sin \frac{k(j-1) \pi}{N+1}+2 \sin \frac{k j \pi}{N+1}-\sin \frac{k(j+1) \pi}{N+1}=4 \sin ^{2} \frac{k \pi}{2(N+1)} \sin \frac{k j \pi}{N+1}\),

where \(1 \leq j \leq N\). Actually, it is not very difficult to derive these formula directly (see appendix A).

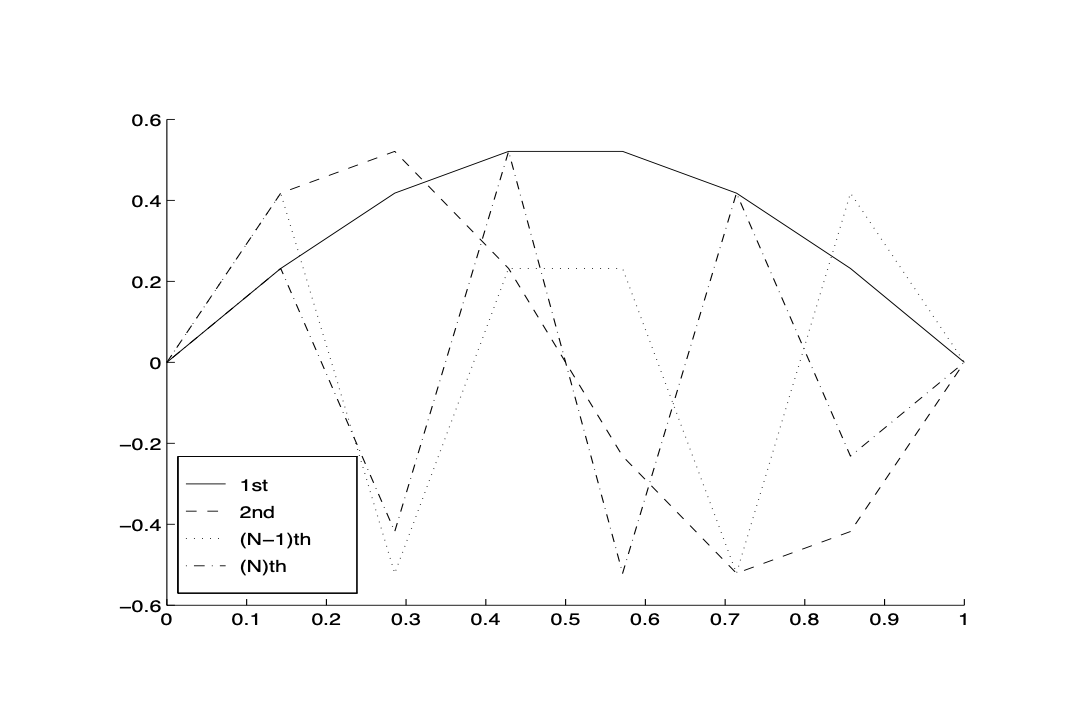

To understand the behavior of these eigenvectors, let us take \(N=6\) and plot the linear interpolations of all these 6 eigenvectors. We immediately observe that each vector \(\xi^{k}\) corresponds to a given frequency, and larger \(k\) corresponds to higher frequency. As \(\lambda_{k}\) is increasing with respect to \(k\), we can then say that a larger eigenvalue of \(A\) corresponds to a higher frequency eigenvector. From a numerical point of view, we say a relatively low frequency vector is relatively smoother whereas a high frequency vector is nonsmooth.

Fig. 18 The eigenvectors¶

We note that the set of eigenvectors \(\xi^{k}=\left(\xi_{j}^{k}\right)\) forms an orthogonal basis of \(R^{N}\). (This fact can be checked directly, or it also follows from the fact that the matrix \(A\) is symmetric and has \(N\) distinctive eigenvalues.) Therefore, any \(\xi \in R^{N}\) can be expanded in terms of these eigenvectors:

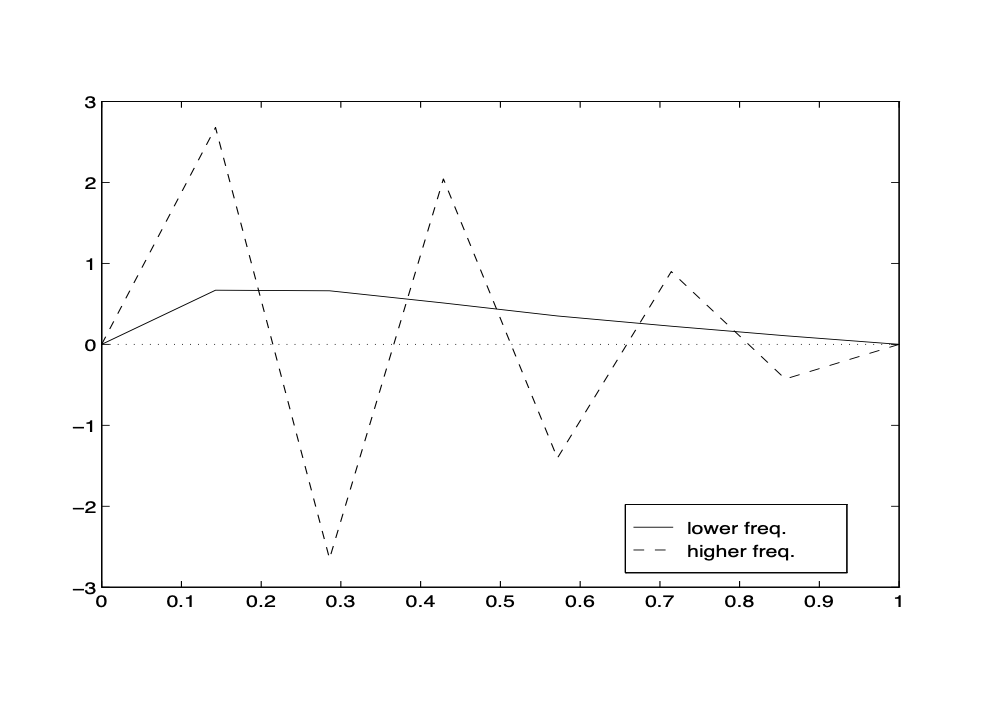

This type of expansion is often called discrete Fourier expansion. The smoothness of the vector \(\xi\) has a lot to do with the relative size of the coefficients \(\alpha_{k}\). To see this numerically, let us again take \(N=4\) and consider the following two vectors

Fig. 19 Plots of \(\xi\) and \(\sigma . \xi\)-solid line; \(\sigma\) dashed line¶

The first vector \(\xi\) has larger coefficients in front of lower frequencies whereas the second vector \(\sigma\) has larger coefficients in front of higher frequencies. From Figure Fig. 19, it is easy to see how the smoothness of a vector depends on the relative size of its Fourier coefficients. We conclude that, in general, a vector with relatively small Fourier coefficients in front of the higher frequencies is relatively smooth and conversely, a vector with relatively large Fourier coefficients in front of the higher frequencies is relatively rough or nonsmooth.

Gradient descent method¶

Noting that \(\nabla I(v)=A * v-b\) and applying the gradient descent method to solve problem (50), we obtain

After \(v\) iterations of gradient descent method, we denote the solution as \(u_{h}^{\nu}\).

Consider the finite element discretization of Poisson equation in 1D: One very simple iterative method for (51) is the following gradient descent method

or, for \(j=1: N\),

where \(\eta>0\) is a positive parameter named learning rate.

It is not so difficult to properly choose \(\eta\) so that the above iterative scheme converges, namely for any initial guess \(\mu^{0}\), the sequence \(\left(\mu^{\prime} l-\right.\) 1)) generated by the above iteration converges to the exact solution \(\mu\) of (51):

Note that

we get

Or

As we known,

if and only if \(\rho(I-\eta A *)<1\). Here \(\rho(B)\) is the spectral radius of matrix \(B\). However, \(\rho(I-\eta A *)<1\) if and only if

Thus, a necessary and sufficient condition for the convergence is the following

It is easy to see that (for example, \(4 / h\) is an upper bound of its row sums)

Therefore it is reasonable to make the following choice:

and the resulting algorithm is

In the rest of this section, unless otherwise noted, we shall choose \(\eta\) as above for simplicity.

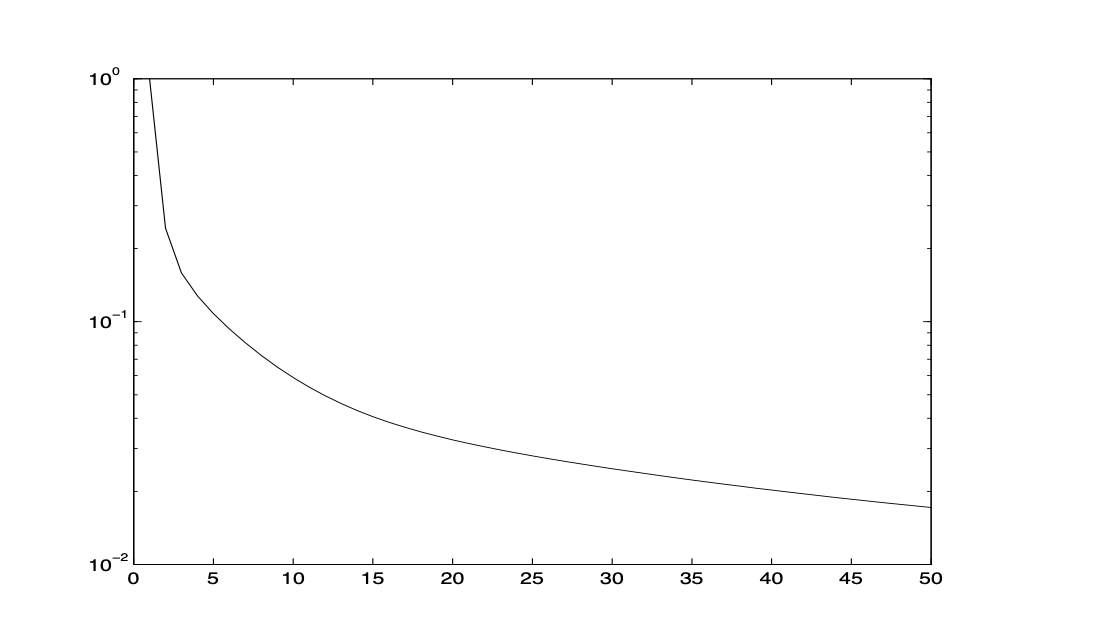

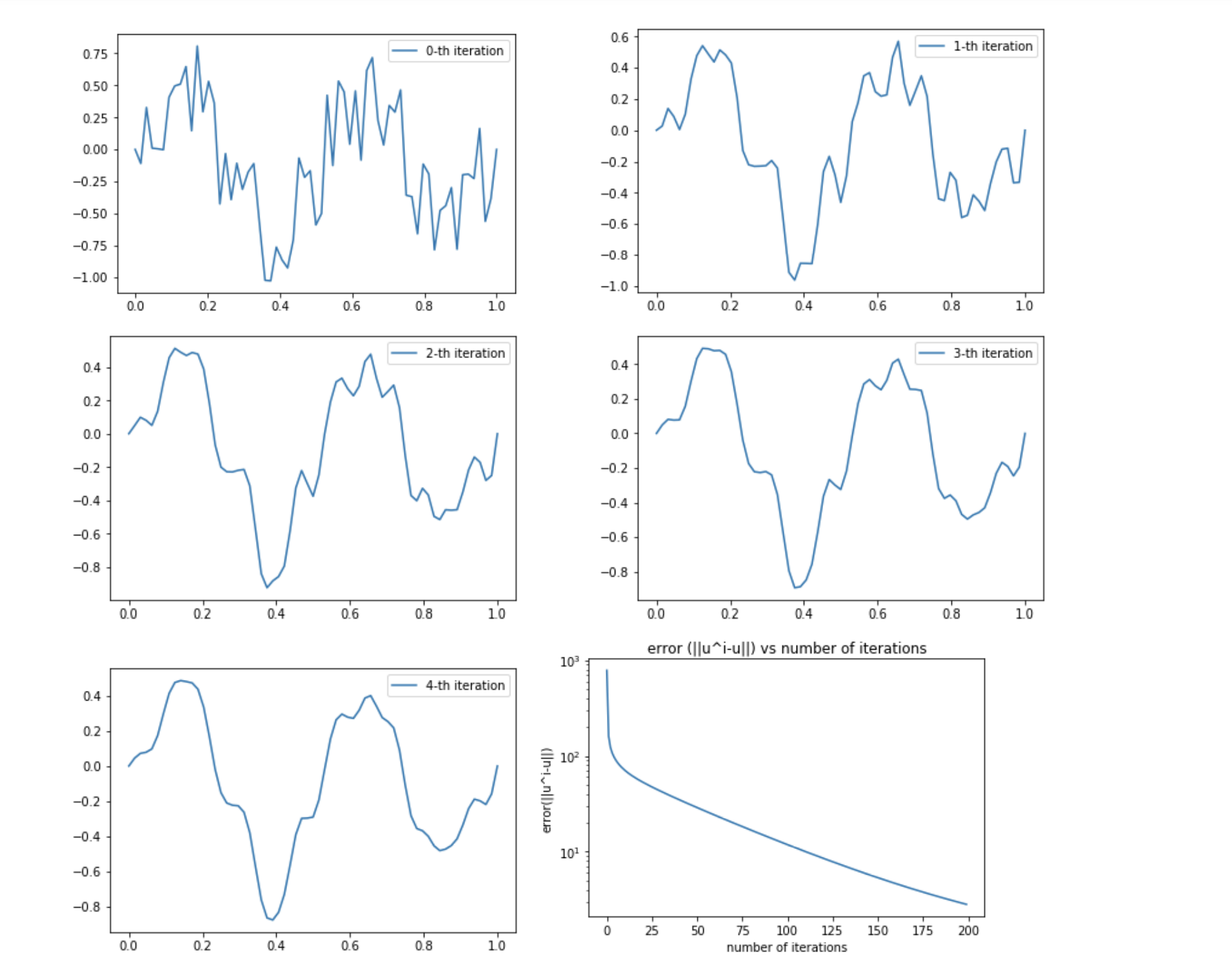

On Figure Fig. 20 the convergence history plot of the above gradient descent iterative method for typical application is shown. As we see, this iterative scheme converges very slowly.

Fig. 20 A picture on the GD method convergence history¶

Our main goal is to find a way to speed up such kind of rather slowly convergent iterative scheme. To do that, we need to study its convergent property in more microscopic level. First of all, let us now take a careful look at the convergence history picture and make the following observation:

Observation 1. The scheme converges rather fast in the very beginning but then slows down after a few steps. Overall, the method converges very slowly.

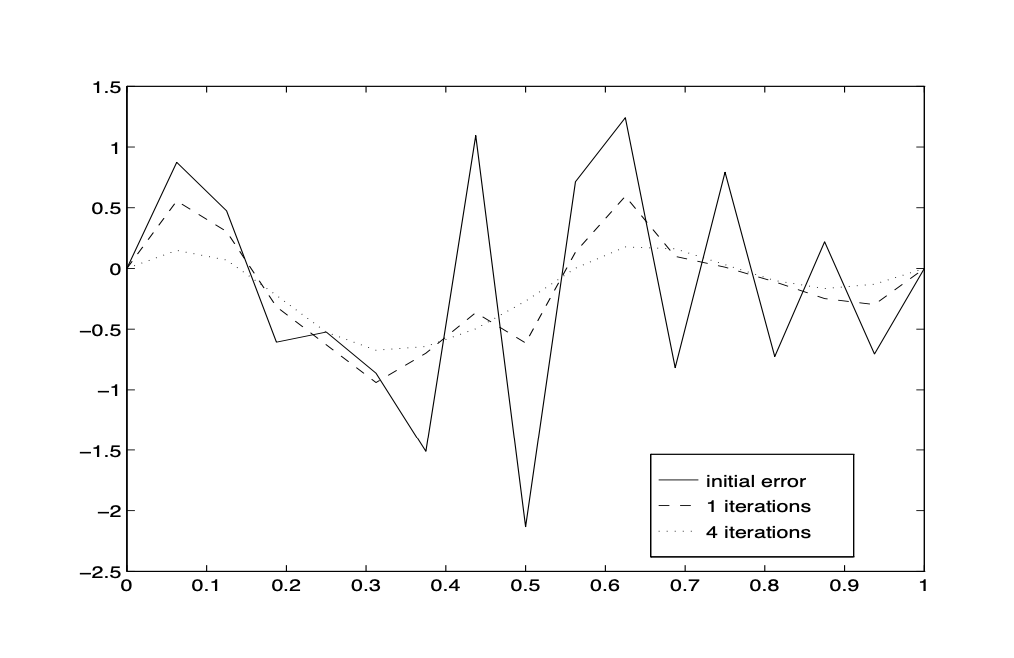

To further understand this phenomenon, let us plot the detailed pictures of the error functions in the first few iterations. After a careful look at these pictures, we have the following observation

Observation 2. The scheme not only converges fast in the first few steps, but also smooth out the error function very quickly.

In other words, the error function becomes a much smoother function after a few such simple iterations. This property of the iterative scheme is naturally called a smoothing property and an iterative scheme having this smoothing property is called a smoother.

Fig. 21 The smoothing effect of the Richardson method¶

The above two observations, especially the second one, concern the most important property of the simple gradient descent method that we can take advantage to get a much faster algorithm.

Example . Let \(f(x)=\pi^{2} \sin \pi x\). Consider

The true solution \(u=\sin \pi x\). Given the partition with the grid points \(x_{i}=\frac{i}{n+1}, i=0,1, \cdots, n+1\), then by finite element discretization, we obtain

Use gradient descent method to solve (55) with random initial guess \(\mu^{0}\). Plot \(\mu^{\ell}-\mu^{0}\) and \(\left\|\mu^{\ell}-\mu^{0}\right\|\) for \(\ell=1,2,3\).

The gradient descent method can be written in terms of \(S_{0}^{\ell}: \mathbb{R}^{N_{t}} \rightarrow\) \(\mathbb{R}^{N_{t}}\) satisfying

for equation (52) with initial guess zero. If we apply this method twice, then

with element-wise form

Then by the definition of convolution, we have

with

and

Hence we denote \(S_{0}^{\ell}\) or \(S_{1}^{\ell}\) as \(S^{\ell}\).

Now for any given \(\mu^{(0)}=\tilde{\mu}^{(0)}\),

we obtain \(\mu^{(j)}=\tilde{\mu}^{(j)}\) which means one step \(S_{1}^{\ell}\) is equivalent to two steps of \(S_{0}^{\ell}\).

Convergence and smoothing properties of GD¶

Because of the extraordinary importance of this smoothing property, we shall now try to give some simple theoretical analysis. To do this, we make use of the eigenvalues and eigenvectors of the matrix \(A\).

Fourier analysis for the gradient descent method

Our earlier numerical experiments indicate that the gradient descent method has a smoothing property. Based on our understanding of the relation between the smoothness and the size of Fourier coefficients, we can imagine that this smoothing property can be analyzed using the discrete Fourier expansion.

Let \(\mu\) be the exact solution of (51) and \(\mu^{(l)}\) the result of \(l-t h\) iteration from the gradient descent method (53). Then

Consider the Fourier expansion of the initial error:

Then

Note that \(\eta=h / 4\) and for any polynomial \(p\)

we get

where

Note that

implies

which, for \(k\) close to \(N\), for example \(k=N\), approaches to 0 very rapidly when \(m \rightarrow \infty\). This means that high frequency components get damped very quickly. However, for \(k\) far away from \(N\), for example \(k=1, \alpha_{k}^{(m)}\) approaches to 0 very slowly when \(m \rightarrow \infty\).

This simple analysis clearly justifies the smoothing property that has been observed by numerical experiments.

Fig. 22 \(u^{0}, u^{1}, u^{2}, u^{3}, u^{4}\)¶

An intuitive discussion

Both the gradient decsent and Gauss-Seidel methods are oftentimes called local relaxation methods. This name refers to the fact that what both of these algorithms do are just trying to correct the residual vector locally at one nodal point at a time (recall that \(\mu_{j} \approx u\left(x_{j}\right)\) ). This local relaxation procedure is then effective to the error components that are local in nature. Incidently, the nonsmooth or high frequency component which oscillates across one or few grid points have a strong local feature. Therefore, it is not surprising the both gradient descent and Gauss-Seidel iteration can damp out these nonsmooth components more easily. These methods are very inefficient for relatively smoother components in the error since a smoother function is more globally related in nature.